Increase Disk Space for Linux Virtual Machine Created by VMware

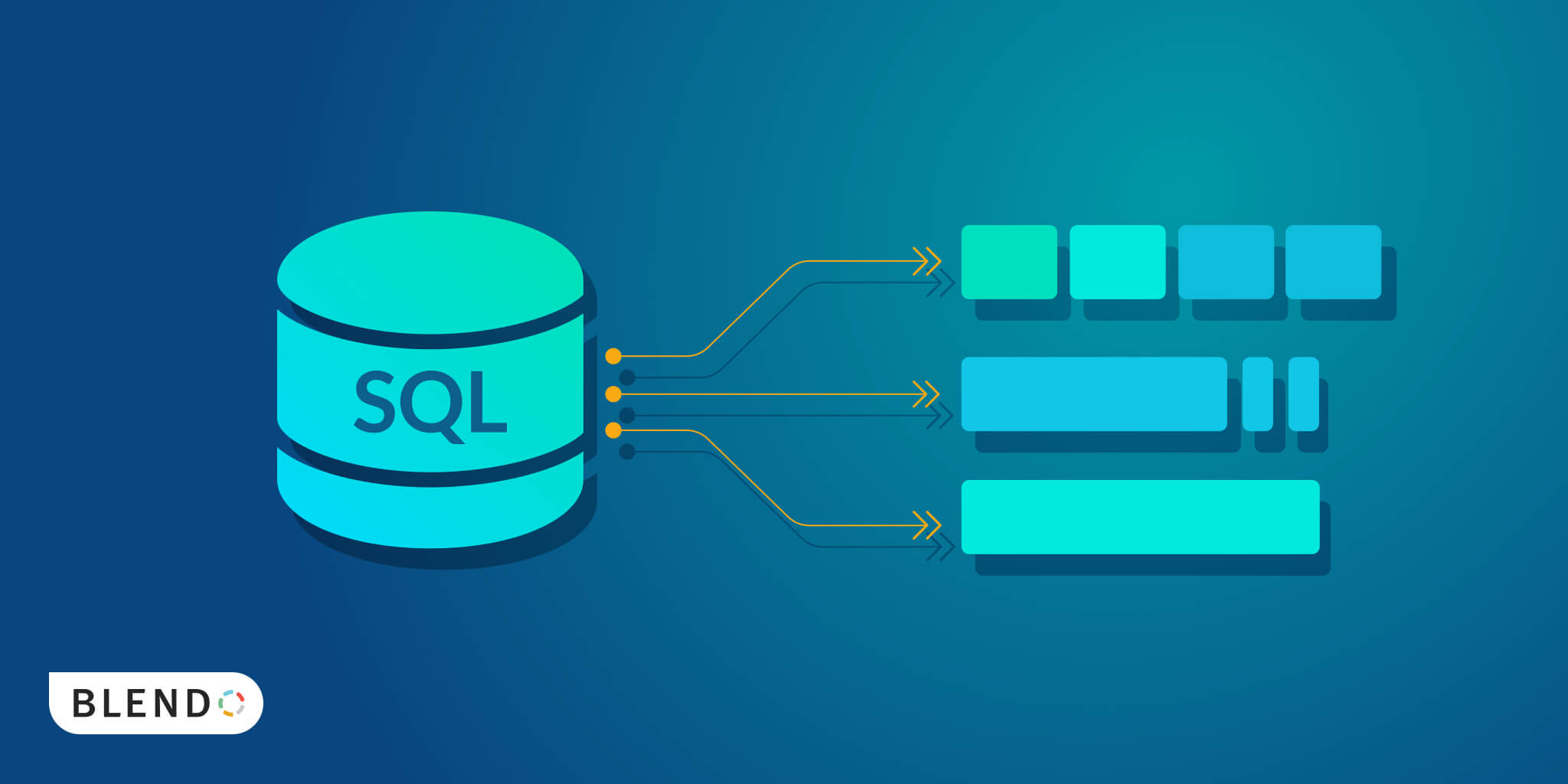

A virtual machine is a common way to set up a Linux development environment on a Windows PC. One of the great things about recent Linux distros such as RHEL, Ubuntu, and CentOS is that most of them adopt LVM (Logical Volume Manager) to manage storage, which is a natural fit for virtualized Linux systems running on VMware or VirtualBox.

The main characteristic of LVM is that it can resize volumes dynamically: it combines multiple partitions so that they behave like a single partition on a single disk. Adding and removing partitions to grow or shrink disk space also becomes much easier than before. This applies to virtualized hard drives too, so growing the disk space takes only a few steps. You might ask what the point is. If a virtual machine is your main development environment, the disk fills up quickly as more and more tools and libraries are installed. My VM's hard disk was 20GB by the default settings, but after I set up everything in my environment, my root directory had only 300MB of free space left.
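Before resizing anything, it can help to confirm how much room is actually left. The snippet below is a minimal sketch using `shutil.disk_usage` from Python's standard library; the helper name `free_space_gib` is made up for illustration. On the command line, `df -h /` reports the same information.

```python
import shutil


def free_space_gib(path: str = "/") -> float:
    """Return the free space of the filesystem containing `path`, in GiB."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    return usage.free / 1024**3


# Report the free space on the root filesystem.
print(f"Free space on /: {free_space_gib('/'):.2f} GiB")
```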

I will grow the disk space of my Linux virtual machine to 40GB:

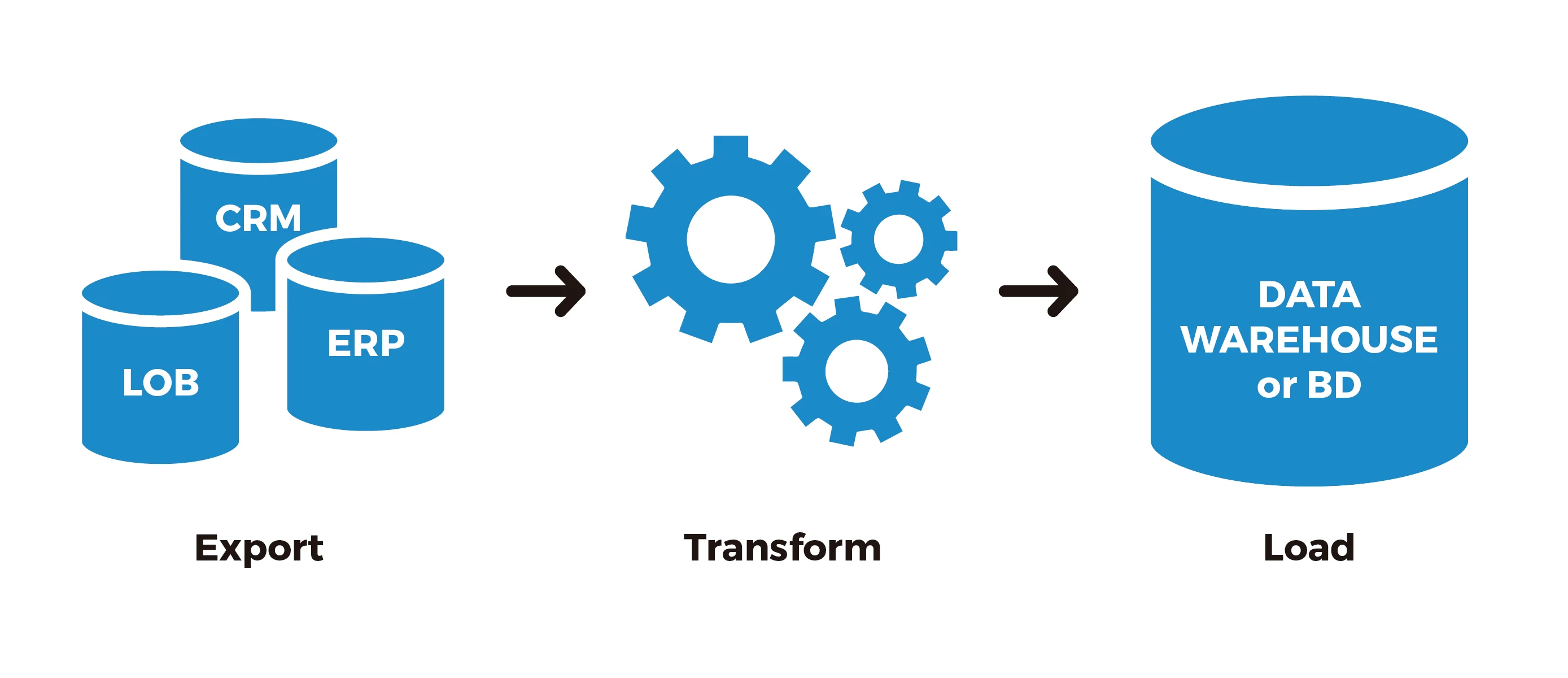

Before we get our hands dirty, it helps to have a basic understanding of LVM in terms of three key concepts: PV (physical volume), VG (volume group), and LV (logical volume). LVM combines multiple partitions or disks (PVs) into one large storage pool (VG), then carves that pool into one or more logical volumes (LVs), each of which can be formatted with a filesystem and mounted.
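The relationship between the three can be pictured with a small toy model. This is only a sketch in Python, not LVM's real interface: the `VolumeGroup` class, its methods, and the device names `/dev/sda2` and `/dev/sda3` are all hypothetical, and sizes are in whole GB with no allowance for metadata or extent rounding.

```python
from dataclasses import dataclass, field


@dataclass
class VolumeGroup:
    """Toy stand-in for an LVM volume group (VG): a pool built from PVs."""
    name: str
    pv_sizes_gb: dict = field(default_factory=dict)  # PV name -> size in GB
    lv_sizes_gb: dict = field(default_factory=dict)  # LV name -> size in GB

    def add_pv(self, pv_name: str, size_gb: int) -> None:
        # Adding a physical volume enlarges the pool.
        self.pv_sizes_gb[pv_name] = size_gb

    @property
    def total_gb(self) -> int:
        return sum(self.pv_sizes_gb.values())

    @property
    def free_gb(self) -> int:
        return self.total_gb - sum(self.lv_sizes_gb.values())

    def create_lv(self, lv_name: str, size_gb: int) -> None:
        # A logical volume is carved out of the pool's free space.
        if size_gb > self.free_gb:
            raise ValueError("not enough free space in the volume group")
        self.lv_sizes_gb[lv_name] = size_gb

    def extend_lv(self, lv_name: str, extra_gb: int) -> None:
        # Growing an LV only works while the pool has free space left.
        if extra_gb > self.free_gb:
            raise ValueError("not enough free space in the volume group")
        self.lv_sizes_gb[lv_name] += extra_gb


# Hypothetical layout: one 20GB partition fully used by the root LV.
vg = VolumeGroup("vg_demo")
vg.add_pv("/dev/sda2", 20)
vg.create_lv("root", 20)

# Add a second 20GB partition to the pool, then grow the root LV into it.
vg.add_pv("/dev/sda3", 20)
vg.extend_lv("root", 20)
print(vg.lv_sizes_gb["root"])  # 40
```

The point of the model is the order of operations: the pool has to grow (a new PV joins the VG) before the LV on top of it can.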